1 Introduction

Research on speech recognition began in the 1950s and, after several decades of development, has reached a considerable level of maturity. Some results have moved from the laboratory to the market, for example in toys, in certain departments, and in voice password input. Advances in DSP and ASIC technology, the fast Fourier transform, and recent research on embedded operating systems have made speaker-dependent recognition practical, especially for methods with low computational complexity. The application of speaker-dependent speech recognition technology to automobile control is therefore very promising.

2 Speaker-dependent speech recognition method

At present, commonly used speaker recognition methods include template matching, statistical modeling, and connectionist methods (i.e., artificial neural network implementations). Considering data volume, real-time performance, and recognition rate, the author uses a combination of vector quantization (VQ) and hidden Markov models (HMM).

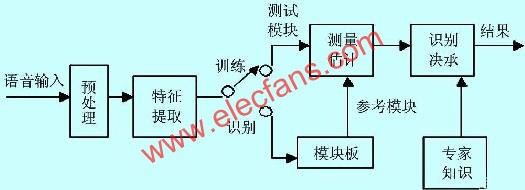

The speaker recognition system mainly consists of a speech feature vector extraction unit (front-end processing), a training unit, a recognition unit, and a post-processing unit. Its composition is shown in Figure 1.

Figure 1 System composition

As Figure 1 also shows, each driver must first record his or her own voice into the system after purchasing the car; this is the training process. Training is best done in a quiet environment and with a sufficient number of utterances. After that, the driver can use the system while driving.

Preprocessing refers to pre-emphasis and framing of the speech signal. The purpose of pre-emphasis is to boost the high-frequency portion and flatten the signal spectrum for spectral analysis or vocal tract parameter analysis. It is implemented with a pre-emphasis digital filter that boosts high frequencies at 6 dB/octave. Although the speech signal is non-stationary and time-varying, it can be regarded as locally short-time stationary, so speech signal analysis is usually carried out in segments, or frames.
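The two preprocessing steps can be sketched as follows. This is a minimal illustration, not the author's implementation: the first-order filter form y[n] = x[n] - mu * x[n-1], the value mu = 0.95, the Hamming window applied to each frame, and the function names are all assumptions, since the article only specifies a 6 dB/octave high-frequency boost and frame-wise processing.

```python
import math

def pre_emphasize(signal, mu=0.95):
    """First-order high-pass filter y[n] = x[n] - mu * x[n-1] (mu is an assumed value)."""
    if not signal:
        return []
    out = [signal[0]]
    for n in range(1, len(signal)):
        out.append(signal[n] - mu * signal[n - 1])
    return out

def hamming(length):
    """Hamming window coefficients for one frame."""
    if length == 1:
        return [1.0]
    return [0.54 - 0.46 * math.cos(2 * math.pi * n / (length - 1))
            for n in range(length)]

def split_into_frames(signal, frame_len, hop):
    """Cut the signal into overlapping frames and window each one."""
    window = hamming(frame_len)
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frames.append([signal[start + i] * window[i] for i in range(frame_len)])
    return frames
```

Calling `split_into_frames(pre_emphasize(samples), frame_len, hop)` yields the short segments on which the feature extraction below operates; samples at the end that do not fill a whole frame are dropped in this sketch.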

2.1 Speech feature vector extraction unit

The fundamental problem in designing a speaker recognition system is how to extract the speaker's basic characteristics from the speech signal. Extraction of the speech feature vector is therefore the foundation of the entire speaker recognition system and has an extremely important influence on its false rejection rate and false acceptance rate. Unlike speech recognition, speaker recognition uses the speaker information in the speech signal without regard to the meaning of the words, and emphasizes the individuality of the speaker. A single type of speech feature vector therefore has difficulty raising the recognition rate. The system uses cepstral coefficients plus pitch period parameters for speaker recognition, and only cepstral coefficients for recognizing control commands. Two kinds of cepstral coefficients are in common use: linear prediction cepstral coefficients (LPCC), derived from the linear prediction coefficients (LPC), and Mel-frequency cepstral coefficients (MFCC), which are based on the Mel scale.

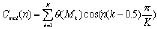

To extract the LPCC parameters, the LPC coefficients are first found with the Durbin recursion, the lattice algorithm, or the Schur recursion, and the LPCC parameters are then derived from them. Let the LPC coefficients of a speech frame be $\alpha_n$; the LPCC parameters $c_n$ are then

$$c_1 = \alpha_1, \qquad c_n = \alpha_n + \sum_{k=1}^{n-1} \frac{k}{n}\, c_k\, \alpha_{n-k}, \quad 1 < n \le p$$

where p is the order of the LPCC coefficients and k is the index of the recursion.
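As a rough sketch of this step, the code below finds the LPC coefficients from a frame's autocorrelation values with the Durbin recursion and then converts them to cepstral coefficients with the standard LPC-to-cepstrum recursion. The function names and the sign convention (the predictor is s[n] ≈ Σ a[k]·s[n-k]) are assumptions; the article does not state which convention it uses.

```python
def levinson_durbin(r, order):
    """Durbin recursion: autocorrelation r[0..order] -> predictor coefficients a[1..order].

    Assumed convention: s[n] is predicted as sum_k a[k] * s[n-k].
    """
    a = [0.0] * (order + 1)
    error = r[0]
    for i in range(1, order + 1):
        if error <= 0:          # silent or degenerate frame: stop early
            break
        acc = r[i] - sum(a[j] * r[i - j] for j in range(1, i))
        k = acc / error         # reflection coefficient
        new_a = a[:]
        new_a[i] = k
        for j in range(1, i):
            new_a[j] = a[j] - k * a[i - j]
        a = new_a
        error *= 1.0 - k * k
    return a[1:]

def lpc_to_lpcc(alpha):
    """c_1 = a_1;  c_n = a_n + sum_{k=1}^{n-1} (k/n) * c_k * a_{n-k},  1 < n <= p."""
    p = len(alpha)
    c = [0.0] * (p + 1)         # c[0] is unused so indices match the formula
    for n in range(1, p + 1):
        acc = alpha[n - 1]
        for k in range(1, n):
            acc += (k / n) * c[k] * alpha[n - k - 1]
        c[n] = acc
    return c[1:]
```

For a first-order predictor with coefficient 0.5, the cepstrum of 1/(1 - 0.5 z⁻¹) is 0.5ⁿ/n, which gives a simple way to check the recursion by hand.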

Further research has found that introducing the first-order and second-order differential cepstra improves the recognition rate.
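One simple way to form such differential features is sketched below. The article does not say how the differences are computed, so the two-point central difference, the edge handling, and the function names here are assumptions chosen for brevity.

```python
def delta(frames):
    """First-order difference across time: (c[t+1] - c[t-1]) / 2, edges replicated."""
    last = len(frames) - 1
    out = []
    for t in range(len(frames)):
        prev = frames[max(t - 1, 0)]
        nxt = frames[min(t + 1, last)]
        out.append([(b - a) / 2.0 for a, b in zip(prev, nxt)])
    return out

def append_deltas(frames):
    """Return per-frame vectors [static, first-order, second-order] concatenated."""
    d1 = delta(frames)
    d2 = delta(d1)              # second-order = difference of the first-order terms
    return [c + a + b for c, a, b in zip(frames, d1, d2)]
```

Each input frame is a list of cepstral coefficients; the output triples the vector length by appending the first-order and second-order terms.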

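To extract the MFCC parameters, the spectrum of the speech signal is divided into K bands according to the Mel curve. If the energy of band k is $\theta(M_k)$, the MFCC parameters are

$$C_{\mathrm{mel}}(n) = \sum_{k=1}^{K} \log\theta(M_k)\,\cos\!\left[\frac{\pi n\,(k - 0.5)}{K}\right], \quad 1 \le n \le p$$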
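A minimal sketch of the last stage of MFCC extraction is given below, assuming the usual cosine-transform form in which the coefficients are a cosine transform of the log band energies. The Mel filter bank that produces the band energies is not shown, and the function name is hypothetical.

```python
import math

def mfcc_from_band_energies(band_energies, n_coeffs):
    """Cosine transform of log Mel-band energies (all energies must be positive)."""
    K = len(band_energies)
    log_e = [math.log(e) for e in band_energies]
    coeffs = []
    for n in range(1, n_coeffs + 1):
        coeffs.append(sum(log_e[k - 1] * math.cos(math.pi * n * (k - 0.5) / K)
                          for k in range(1, K + 1)))
    return coeffs
```

A perfectly flat set of band energies produces coefficients that are all zero, so the coefficients describe the shape of the spectrum rather than its overall level.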

Based on an experimental comparison of the effect of LPCC and MFCC parameters on the recognition rate, the author selects the LPCC parameters and their first-order and second-order differential cepstral coefficients as the feature parameters.

There are many methods for estimating the pitch period, mainly algorithms based on the short-time autocorrelation function, the short-time average magnitude difference function (AMDF), homomorphic signal processing, and linear predictive coding. Only the algorithm based on the short-time autocorrelation function is introduced here.

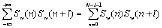

Let $S_w(n)$ be the windowed speech signal, whose non-zero interval is $0 \le n \le N-1$. The autocorrelation function of $S_w(n)$ is called the short-time autocorrelation function of the speech signal $S(n)$ and is denoted $R_w(l)$:

$$R_w(l) = \sum_{n=0}^{N-1-l} S_w(n)\, S_w(n+l)$$

The short-time autocorrelation function takes its maximum at $R_w(0)$ and has a large peak at each integer multiple of the pitch period. After applying a suitable window function (a Hamming window of length 40 ms) and filter (a band-pass filter with a passband of 60 to 900 Hz), the pitch period can be estimated by locating the first major peak of the autocorrelation function and measuring its distance from the zero-lag point.
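The peak search can be sketched as follows. This is an illustrative simplification rather than the author's code: windowing and band-pass filtering are assumed to have been applied already, the 60 to 900 Hz band is reused here as the lag search range (an assumption), and the strongest local peak in that range stands in for the "first major peak".

```python
import math

def short_time_autocorr(frame, max_lag):
    """R(l) = sum_{n=0}^{N-1-l} s(n) * s(n+l) for l = 0..max_lag."""
    N = len(frame)
    return [sum(frame[n] * frame[n + lag] for n in range(N - lag))
            for lag in range(max_lag + 1)]

def estimate_pitch_period(frame, sample_rate, f_min=60.0, f_max=900.0):
    """Return the pitch period in seconds, or None if no peak is found."""
    min_lag = max(1, int(sample_rate / f_max))
    max_lag = min(len(frame) - 2, int(sample_rate / f_min))
    r = short_time_autocorr(frame, max_lag + 1)
    # Keep only true local maxima so the slope near lag 0 is not mistaken for a peak.
    peaks = [l for l in range(min_lag, max_lag + 1)
             if r[l] > r[l - 1] and r[l] >= r[l + 1]]
    if not peaks:
        return None
    best = max(peaks, key=lambda l: r[l])
    return best / sample_rate
```

For a 40 ms frame sampled at 8 kHz (320 samples, an assumed rate), the search covers lags of roughly 8 to 133 samples.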

2.2 Training unit

The function of the training unit is to train, with a suitable algorithm, on speech collected in advance from each speaker to be identified, producing the parameters used for matching. To meet the different requirements of speaker recognition in automotive applications, the training unit is divided into two parts: training for speaker recognition and training for the words to be recognized.

The first part trains on the characteristics of each speaker: it builds one or more sets of HMMs for each legitimate user and, using a vector quantization (VQ) based method, builds a VQ codebook for each legitimate user. The VQ codebook is designed with the LBG algorithm, and the initial codebook is generated by the splitting method.
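A compact sketch of LBG codebook design with splitting is shown below. The additive perturbation `eps`, the iteration limit, the squared Euclidean distance, and the function names are assumptions made for illustration; the codebook size is assumed to be a power of two.

```python
def _dist2(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def _centroid(vectors):
    dim = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]

def nearest(vector, codebook):
    """Index of the codeword closest to the vector."""
    return min(range(len(codebook)), key=lambda i: _dist2(vector, codebook[i]))

def lbg_codebook(vectors, size, eps=0.01, max_iter=20):
    """Grow a codebook by splitting: 1 -> 2 -> 4 -> ... -> size codewords."""
    codebook = [_centroid(vectors)]
    while len(codebook) < size:
        # Split every codeword into a slightly perturbed pair.
        codebook = [[c + s for c in cw] for cw in codebook for s in (eps, -eps)]
        for _ in range(max_iter):
            cells = [[] for _ in codebook]
            for v in vectors:
                cells[nearest(v, codebook)].append(v)
            # Empty cells keep their old codeword.
            updated = [_centroid(cell) if cell else cw
                       for cell, cw in zip(cells, codebook)]
            if updated == codebook:
                break
            codebook = updated
    return codebook

def average_distortion(vectors, codebook):
    """Mean quantization error of the vectors against one speaker's codebook."""
    return sum(_dist2(v, codebook[nearest(v, codebook)]) for v in vectors) / len(vectors)
```

In a VQ-based speaker check, the feature vectors of a test utterance would be scored with `average_distortion` against each legitimate user's codebook, with a lower value indicating a closer match.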

The second part collects multiple training samples for each isolated word used in the control commands in order to estimate the HMM parameters (one or more sets) of that word. A complete description of an HMM includes two model parameters, N (the number of states) and M (the number of distinct observation symbols), and three sets of probability measures, A, B, and π. For convenience, a complete model is usually written λ = (N, M, π, A, B), or abbreviated as λ = (π, A, B). The model parameters of each word v, v = 1, ..., V, can be estimated with the Baum-Welch re-estimation method.

2.3 Recognition unit

The function of the recognition unit is to identify the speaker and to estimate the control command word string under a given decision rule, using the trained HMM parameters and the measured pitch period of the speaker. The decision rule usually adopted for the HMM parameters is maximum a posteriori probability, implemented with the Viterbi algorithm.
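The Viterbi scoring step can be sketched as follows for a discrete-observation HMM λ = (π, A, B). The log-domain formulation, the list-of-lists layout of A and B, and the helper `recognize` that picks the best-scoring word model are assumptions made for illustration.

```python
import math

def _log(x):
    return math.log(x) if x > 0 else float("-inf")

def viterbi(obs, pi, A, B):
    """Return (log-probability of the best state path, the path itself)."""
    N = len(pi)
    score = [_log(pi[i]) + _log(B[i][obs[0]]) for i in range(N)]
    backptr = []
    for o in obs[1:]:
        prev, score, ptr = score, [], []
        for j in range(N):
            best_i = max(range(N), key=lambda i: prev[i] + _log(A[i][j]))
            score.append(prev[best_i] + _log(A[best_i][j]) + _log(B[j][o]))
            ptr.append(best_i)
        backptr.append(ptr)
    last = max(range(N), key=lambda i: score[i])
    path = [last]
    for ptr in reversed(backptr):   # trace the best path backwards
        path.append(ptr[path[-1]])
    path.reverse()
    return score[last], path

def recognize(obs, models):
    """Pick the word whose HMM gives the observation sequence the highest Viterbi score."""
    return max(models, key=lambda word: viterbi(obs, *models[word])[0])
```

Here `obs` is a sequence of VQ codeword indices and `models` maps each command word to its `(pi, A, B)` triple, so one Viterbi pass per word model is enough to choose a command.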

2.4 Post-processing unit

The post-processing unit improves the recognition rate of the system by making full use of each speaker's voice parameters and the probability distribution of the duration of each state in the vocabulary words.

3 System implementation

The control commands of a car are combinations of a limited number of words and digit strings, so recognizing these voice commands is a speaker-dependent, small-vocabulary connected-word recognition task combined with text-dependent speaker verification. In terms of both the processing speed and the storage capacity of current DSPs, real-time recognition of these voice commands is entirely feasible.

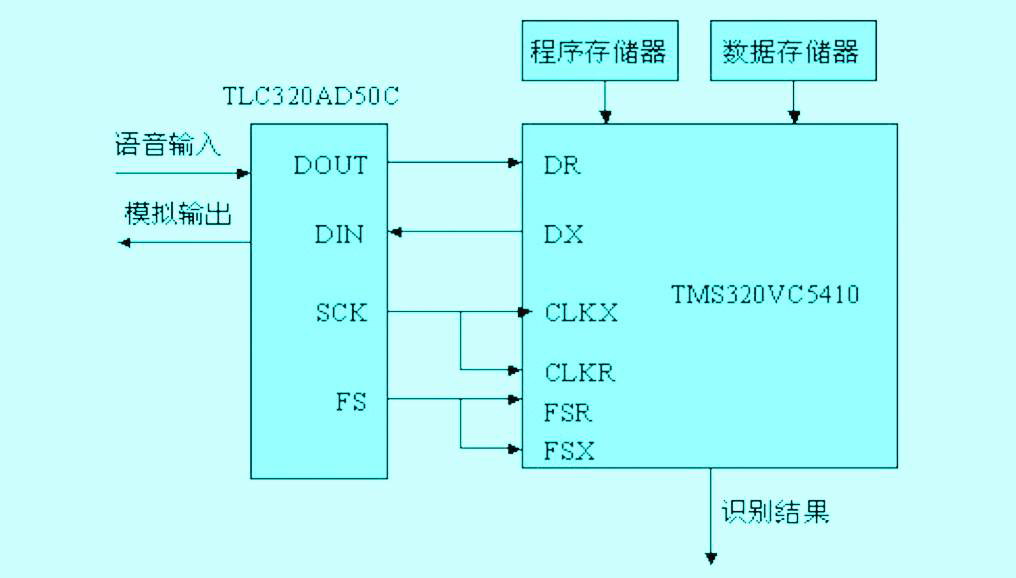

The block diagram of the identification system is shown in Figure 2. In this system, the speech recognition part, which requires very high computing power and a large amount of storage, is handled entirely by the DSP.

Figure 2 Block diagram of the identification system

The identification system in the block diagram handles voice input, A/D conversion, and recognition. The core of the system is the TMS320VC5410, chosen because its processing speed and memory capacity meet the requirements and because some of its parallel hardware structures are well suited to the various speech recognition algorithms. The program, the HMM parameter table trained offline, and the corresponding dictionary are stored in program memory, while data memory holds the intermediate results of the recognition process. The A/D chip is the TLC320AD50C, which integrates A/D and D/A converters, a low-pass filter, and a sample-and-hold circuit. The analog voice signal is input mainly through the microphone, which helps ensure the security of voice access control, and the converted digital voice data is transmitted to the DSP over a synchronous serial link, as shown in Figure 2.

4 Conclusion

Voice-controlled cars are a future trend. At present, voice technology for vehicles is used only in some toys, so the field of car control using voice technology has considerable market potential.

Moreover, speaker recognition technology has matured to the point of practical use, yet there are currently few applications of it. The author attempts to propose a solution that is relatively easy to implement and that puts speaker recognition technology into practice. In practical applications, however, speaker recognition systems face a common problem: they cannot tell whether an utterance is live or recorded. The speaker recognition system proposed by the author can effectively guard against this. When the system is implemented, the prompt text can be generated randomly or by other means, for example as a random digit string, so that an impostor cannot record it in advance, which increases driving safety.
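Generating such a prompt could look like the sketch below. The function name and the default length of six digits are assumptions; the article only says that a random digit string may be used.

```python
import secrets

def make_prompt_digits(length=6):
    """Return an unpredictable digit string for the driver to read aloud."""
    return "".join(secrets.choice("0123456789") for _ in range(length))
```

Because the string changes on every attempt, a recording of an earlier session would not match the digits requested in the current one.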
