Lei Feng Network note: This article was translated by Qu Xiaofeng, a doctoral student in biometric identification at Hong Kong Polytechnic University, from Jono MacDougall's "How Magic Leap Works - From Field of View to GPU". Reprinted with authorization from Jiangmen Entrepreneurship (thejiangmen).

Image Source: fastcompany

Magic Leap has always kept the details of its technology confidential. All we know for now is that the system is entirely new and far beyond anything consumers have seen from existing competitors. It is no wonder Magic Leap wants to keep its revolutionary system secret. Many companies are looking for a chance to pry into it and understand what technology has people so excited.

This kind of technology sounds like the potentially revolutionary "new thing" that Apple would love to have. It is what Microsoft is trying to achieve with HoloLens but is still far from realizing. It picks up where Google Glass left off, yet is clearly a generation ahead.

What exactly is this technology, and how does it work? To find out, I went through talks given by Magic Leap staff, the company's patents and job postings, and the backgrounds of the people who work there.

Overview

These are regular-size glasses. But where would the cameras go? Note: this is not Magic Leap's product.

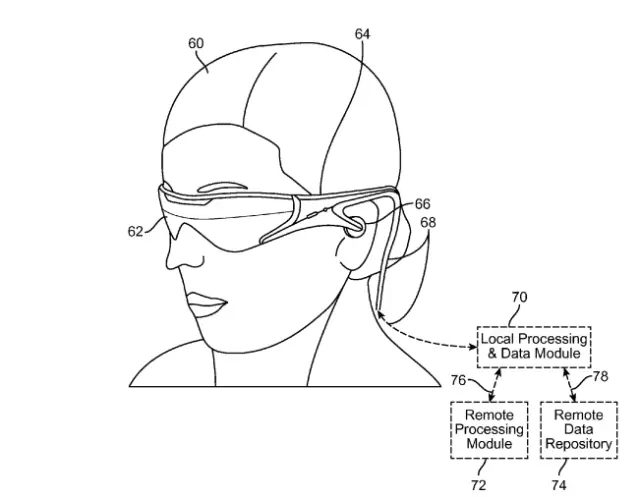

Before we get into the details, let's cover what kind of technology this is. Simply put, Magic Leap is making a device that can project objects into a person's field of view, far more realistically than the similar devices we see today. The device consists of two parts: a pair of glasses and a pocket-sized projector/computing module. The module is roughly the size of a cell phone but has no screen, and it connects to the glasses with a cable. The glasses are similar in size and structure to ordinary glasses, if perhaps a little bulkier. Small size is an important feature of the product: it means the device can be worn in social situations and may be as portable and easy to use as a smartphone.

Portable Projection and Calculation Module

Pictured: a charging pouch similar in size to the Magic Leap portable module.

Magic Leap's most notable move is taking a large share of the necessary hardware out of the glasses and putting it in a separate module. HoloLens, by contrast, shrinks its components to fit inside the headset, but that only gets it as small as it is today. What is inside the portable module? Probably the following:

Battery

The battery capacity will be about the same as today's smartphones, perhaps larger. If the device is meant to replace a smartphone, it needs a fairly big battery; I estimate at least 5000 mAh.

CPU/GPU

It will almost certainly use the latest generation of mobile CPUs, most likely from Qualcomm. Fortunately, it should not need high-end graphics: mixed reality (MR) only has to render the virtual objects rather than the entire scene, which avoids the heavy graphics load that virtual reality (VR) demands.

RAM

Similar to smart phones, estimated at 3-4GB.

Custom SLAM chip

SLAM (simultaneous localization and mapping) is needed to anchor virtual objects in the real world. They may tape out their own chip or use one from Movidius or a similar vendor.

4G/Wifi/Bluetooth

SIM card

GPS chip

Camera

There must be several cameras on the glasses, but that does not rule out a camera in the portable module as well. The SLAM cameras on a head-mounted device are different from ordinary digital cameras, and the limited size of the glasses may not leave room for a high-quality photo camera, so it may go in the portable module instead. A benefit of this design is that it eases other people's privacy concerns: without the portable module, the glasses alone cannot take photos.

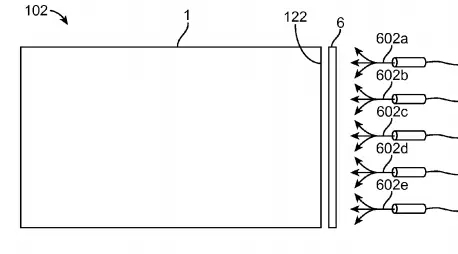

Laser projector

This is the most important innovation of the device. The projection system was removed from the glasses and moved to the portable module, resulting in a significant reduction in the volume of the product. The projected light is generated by the portable module and then transmitted through the optical fiber to the headset. We will analyze the working principle in detail later.

With as much as possible moved into the portable module, what's left on the glasses? The following components:

Inertial Measurement Unit (IMU)

The usual combination of accelerometer, gyroscope, and compass.

Headphones

Possibly bone-conduction headphones like those on Google Glass, depending on whether that fits their design philosophy. The advantage of bone conduction is that you can hear audio from the device while still hearing the sounds around you.

Microphone

Optical section

Camera

The optical section and the camera are the most interesting components, so let's look at them in detail.

Optical section

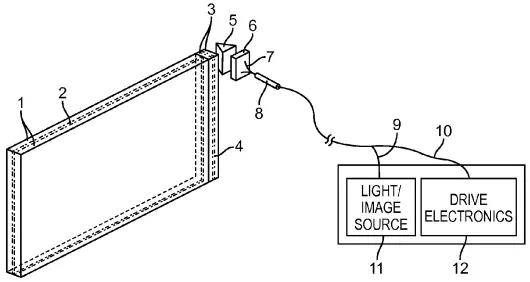

According to the patent literature, the optics Magic Leap uses are much smaller than the conventional projection systems used by HoloLens and Google Glass. As the figure above shows, the light source is separate from the body of the headset, which is why we can speculate that it sits in the portable module. Second, the lens system is also very small. The diagram is clearly not drawn to scale, but it should still indicate the approximate dimensions of the components. The only element we can really see is the lens; comparing the widths of elements 5, 6, 7, and 8 at the top of the figure gives a sense of their relative size.

What does this mean? How can they shrink the optics so much while claiming light field display, high resolution, and an impressive field of view? The answer has two parts: the fiber scanning display and the photonic light field chip.

Fiber scanning display

The fiber scanning display is an entirely new display system that has never been used in consumer electronics. All we have to go on is Magic Leap's 2013 patent application. That application has been around for some time, so some details about system performance may be out of date, but the basic concepts should still hold.

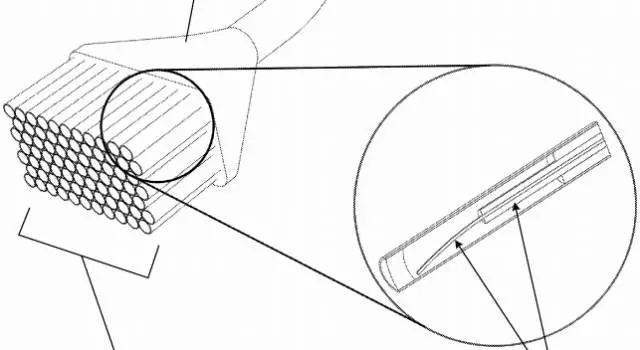

The system uses an array of actuated fibers to scan out an image much larger than the fibers' own aperture. It works like an old CRT television, except that instead of sweeping an electron beam across the screen to excite phosphors, it sweeps the output light directly. The scanning is driven by piezoelectric actuators at frequencies of several tens of kHz. The actual image refresh rate is much lower, however, because a full image takes many sweeps (the patent gives 250 as an example). This changes how we should think about resolution: with this technique, image resolution depends on the fiber's scanning frequency, the smallest spot the fiber can focus to (which sets the pixel size), the number of sweeps needed per image, and the frame rate. Judging from the patent application, and allowing for further optimization since then, the resolution should be far better than that of existing consumer electronics.
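A back-of-the-envelope sketch makes the trade-off concrete. It uses only the relationship described above (many fiber sweeps per full image); the 24 kHz sweep rate, the 250 sweeps per image, and the light-source sampling rate are hypothetical example values, not figures confirmed for Magic Leap's device.

```python
def frame_rate_hz(scan_frequency_hz: float, scans_per_frame: int) -> float:
    """Full images per second when one image needs `scans_per_frame` fiber sweeps."""
    if scans_per_frame <= 0:
        raise ValueError("scans_per_frame must be positive")
    return scan_frequency_hz / scans_per_frame


def samples_per_frame(sample_rate_hz: float, scan_frequency_hz: float,
                      scans_per_frame: int) -> int:
    """Light-source samples (roughly, pixels) drawn in one full image.

    `sample_rate_hz` is a hypothetical modulation rate of the light source;
    the patent excerpt discussed here does not give one.
    """
    return round(sample_rate_hz / frame_rate_hz(scan_frequency_hz, scans_per_frame))


# Hypothetical numbers: a 24 kHz fiber sweep and 250 sweeps per image.
print(frame_rate_hz(24_000, 250))              # 96.0 full images per second
print(samples_per_frame(500e6, 24_000, 250))   # 5208333 samples per image
```

Drawing more sweeps per image adds detail but lowers the frame rate, and a faster sweep buys some of it back, which is why the scan frequency, spot size, sweep count, and frame rate all have to be considered together.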

Multiple fiber scanning units are packed tightly into a bundle to enlarge the display. Each fiber scanning unit is 1 mm wide.

Besides resolution and frame rate, a wide field of view (FOV) is also key to displaying realistic holographic images. The background section of the patent has an interesting passage on this:

The field of view of a head-mounted display (HMD) is determined by both the image size of the microdisplay and the viewing optics. The human visual system has a field of view of roughly 200° horizontally and 130° vertically, but most head-mounted displays provide only about 40°... An angular resolution of about 50-60 arcseconds corresponds to 20/20 acuity on an eye chart, and a display's angular resolution is determined by the pixel density of its microdisplay. To match typical human vision, a head-mounted display should provide 20/20 resolution across a field of view 40° horizontal by 40° vertical; at 50 arcseconds per pixel, that is about 8 million pixels (8 Mpx). Extending the field of view to 120° horizontally and 80° vertically requires about 50 million pixels (50 Mpx).
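The 8 Mpx and 50 Mpx figures follow from simple arithmetic: divide each field-of-view angle, in arcseconds, by the angular size of one pixel. The sketch below assumes the 50-arcsecond-per-pixel target quoted above and a uniform pixel grid; the function name is illustrative only.

```python
ARCSEC_PER_DEGREE = 3600


def required_pixels(h_fov_deg: float, v_fov_deg: float,
                    arcsec_per_pixel: float = 50.0) -> tuple[int, int, int]:
    """Columns, rows, and total pixels needed to cover a field of view
    when each pixel subtends `arcsec_per_pixel` arcseconds."""
    cols = round(h_fov_deg * ARCSEC_PER_DEGREE / arcsec_per_pixel)
    rows = round(v_fov_deg * ARCSEC_PER_DEGREE / arcsec_per_pixel)
    return cols, rows, cols * rows


print(required_pixels(40, 40))    # (2880, 2880, 8294400)  -> about 8 Mpx
print(required_pixels(120, 80))   # (8640, 5760, 49766400) -> about 50 Mpx
```

Because the pixel count grows with the product of both angles, tripling the horizontal field and doubling the vertical field multiplies the requirement by six.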

Two points stand out here. First, consumer displays have far lower resolution than a large field of view requires, which is why HoloLens has such a hard time widening its field of view. Second, the passage shows Magic Leap's ambition: they want a field of view of 120° horizontally and 80° vertically. That is wider than the Oculus Rift's field of view, at far higher resolution. Have they achieved it? It is still hard to say, but the patent does give some technical parameters, and remember that it dates from three years ago. They have likely refined the technology considerably since then.

Pixel pitch is the distance from the center of one pixel to the center of the next, and it limits image resolution. Conventional microdisplays, such as those used by HoloLens, have a pixel pitch of 4-5 microns, which limits their resolution and therefore the field of view they can deliver. The patent filings indicate that the scanning fiber display can achieve a 0.6-micron pixel pitch, roughly 7-8 times finer, close to an order of magnitude improvement.
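To see what the pitch difference means, the sketch below counts how many pixels fit across 1 mm (the width the patent gives for one fiber scanning unit) at each pitch. It is plain arithmetic under the assumption of a uniform pitch; it does not model how the fiber scanner actually lays out its spots.

```python
def pixels_across(width_um: float, pitch_um: float) -> int:
    """How many pixels fit across `width_um` at a uniform pixel pitch."""
    return int(width_um // pitch_um)


MM_IN_UM = 1000.0  # micrometers per millimeter

for label, pitch in [("microdisplay, 5 um pitch", 5.0),
                     ("microdisplay, 4 um pitch", 4.0),
                     ("fiber scanner, 0.6 um pitch", 0.6)]:
    print(f"{label:28s} -> {pixels_across(MM_IN_UM, pitch):5d} px per mm")

# Linear improvement per axis relative to 4-5 um microdisplays.
print(f"{4.0 / 0.6:.1f}x to {5.0 / 0.6:.1f}x finer")
```

The gain applies along each axis, so the pixel count per unit area improves by roughly the square of that factor.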

What resolution can it achieve? One paragraph of the patent mentions a resolution of 4375x2300, but I think the real figure is higher. That number is an example given while describing the basic method, and it is followed by a discussion of how multicore fibers improve performance. I expect the actual resolution to be much higher, which is crucial for a wide field of view.
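The reason resolution matters so much for a wide field of view can be shown by running the patent-style calculation in reverse: spread a fixed number of pixels across a given angle and check the angular size of each pixel against the 50-arcsecond 20/20 benchmark. The 90° case is only included because it is the author's bet; all field-of-view values here are illustrative.

```python
ARCSEC_PER_DEGREE = 3600
TARGET_20_20 = 50.0  # arcseconds per pixel, the patent's 20/20 benchmark


def arcsec_per_pixel(fov_deg: float, pixels: int) -> float:
    """Angular size of one pixel when `pixels` span `fov_deg` degrees."""
    return fov_deg * ARCSEC_PER_DEGREE / pixels


for fov in (40, 90, 120):
    size = arcsec_per_pixel(fov, 4375)
    verdict = "meets" if size <= TARGET_20_20 else "misses"
    print(f"4375 px across {fov:3d} deg: {size:6.1f} arcsec/px ({verdict} 20/20)")
```

With 4375 columns, a 40° display beats the 20/20 benchmark, while at 90° or 120° each pixel covers well over 50 arcseconds, so a much higher resolution would be needed.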

The final point in the patent, which concerns the 120° field of view, is particularly noteworthy:

The techniques described above can be used to produce super-high-resolution head-mounted or other near-eye displays with a wide field of view.

I think this strongly suggests that the field of view will be well over 40 degrees, and getting close to the stated 120 degrees is not out of the question. If I had to bet, I'd bet on 90°.

Photonic light field chip

When I first heard Rony Abovitz call his lens a "photonic light field chip", I groaned. Why keep giving fancy names to old things? Just call it a lens, Rony. But as my understanding deepened, I realized it really is not a simple lens. What does it do, and why is it more interesting than other lenses? First, let's look at diffractive optical elements.

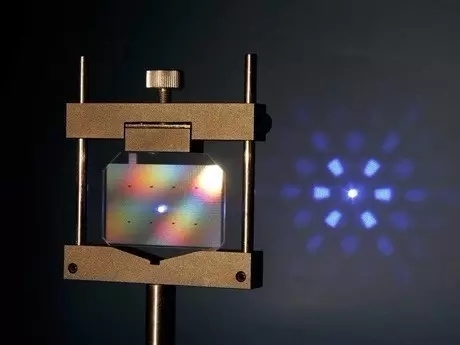

An example of a diffractive optical element

Diffractive optical elements (DOEs) can be thought of as a set of very fine lenses. They can be used to shape, split, homogenize, and diffuse light. Magic Leap combines a linear diffraction grating with a circular lens to split the light and give the beam a specific focal distance. That is, the element directs light into your eye so that it appears to come from the correct focal plane. That is easier said than done, but at least this is how the patent I found describes it.
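The wavelength sensitivity of a linear grating follows from the standard grating equation. At normal incidence, diffraction order m leaves a grating of period d at the angle θ where sin θ = mλ/d. The sketch below applies that equation; the 1000 nm period and the three laser wavelengths are hypothetical values chosen for illustration, not parameters from Magic Leap's patent.

```python
import math
from typing import Optional


def diffraction_angle_deg(wavelength_nm: float, period_nm: float,
                          order: int = 1) -> Optional[float]:
    """Exit angle of diffraction order `order` for light at normal incidence
    on a linear grating, from sin(theta) = order * wavelength / period.
    Returns None when that order cannot propagate (|sin(theta)| > 1)."""
    s = order * wavelength_nm / period_nm
    if abs(s) > 1.0:
        return None
    return math.degrees(math.asin(s))


PERIOD_NM = 1000.0  # hypothetical grating period
for name, wl in [("red", 635.0), ("green", 532.0), ("blue", 450.0)]:
    print(f"{name:5s} {wl:5.0f} nm -> {diffraction_angle_deg(wl, PERIOD_NM):5.1f} deg")
```

The same grating sends each color off at a different angle, which is one way to see why a single DOE tuned for one wavelength cannot serve the others.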

To generate a light field, Magic Leap builds the photonic chip from two separate components. One element (6 in the schematic) takes in the projected light and injects it into the second element (1 in the schematic), which directs the light into the eye.

Both components rely on DOEs. The main problem with DOEs is that each one is carefully tuned for a single job: it cannot work across different wavelengths, and it cannot change its focus in real time. To get around this, Magic Leap stacks a set of DOEs, each optimized for a different wavelength and focal plane, into one lens assembly. Each DOE is extremely thin, on the order of a wavelength of light, so stacking them does not make the device too thick. This is why the optical system is called a chip. Magic Leap can switch individual DOE layers on and off, which changes the path light takes to reach the eye. That is how they shift the focus of the image to create a true light field. As the patent says:

For example, when the first DOE in a stack is switched on, an observer looking from the front sees an optical viewing distance of 1 meter. When the second DOE in the stack is switched on, an optical viewing distance of 1.25 meters is produced.

You might think this technique is very limited, especially if producing images at many different focal distances requires a lot of layers... but that is not really the case. Different combinations of DOEs produce different outputs: rather than one DOE per focal plane, each combination of DOEs corresponds to a focal plane.

Changing the active layer in the DOEs group will change the light emitted from the photonic light field chip.

They probably have many more layers than the figure shows, but the exact number has not been made public.
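To show why combinations give more focal planes than there are layers, here is a toy model. It assumes, purely for illustration, that each active layer adds a fixed optical power in diopters (1/m), as thin lenses in contact do; the patent excerpt does not say how combined layers actually interact. The layer powers are hypothetical: layers 0 and 1 are chosen to reproduce the patent's 1 m and 1.25 m example, and layer 2 is invented.

```python
from itertools import combinations


def focal_distances(layer_powers_d: list[float]) -> dict[tuple[int, ...], float]:
    """Viewing distance in meters for every non-empty set of active layers,
    under the toy assumption that the active layers' powers (diopters) add.
    All powers are assumed positive, so no combination sums to zero."""
    result = {}
    n = len(layer_powers_d)
    for k in range(1, n + 1):
        for active in combinations(range(n), k):
            total_power = sum(layer_powers_d[i] for i in active)
            result[active] = 1.0 / total_power
    return result


planes = focal_distances([1.0, 0.8, 0.5])
for active, dist in sorted(planes.items(), key=lambda kv: kv[1]):
    print(active, f"{dist:.2f} m")
```

Under this model, N switchable layers yield up to 2^N - 1 distinct focal distances (7 for the three layers above), which is the point the paragraph makes about combinations.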

Finally, we learn how Magic Leap achieves its earlier claim of using light to create darkness. An outer DOE and an inner DOE cancel outside light, much like active noise-canceling headphones. The patent puts it this way:

This can be used to cancel planar light waves such as background or real-world light, somewhat like active noise-canceling headphones.
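The noise-canceling analogy rests on destructive interference: a wave added to a copy of itself shifted by half a period (π radians) sums to zero. The sketch below is an idealized one-dimensional illustration with equal-amplitude scalar waves; real optical cancellation also depends on coherence, polarization, and amplitude matching, and the function is not taken from the patent.

```python
import math


def residual_peak(phase_error_rad: float, samples: int = 256) -> float:
    """Peak of (ambient wave + canceling wave) over one period.

    The canceling wave is the ambient wave shifted by pi plus
    `phase_error_rad`, with equal amplitude (an idealization).
    """
    peak = 0.0
    for i in range(samples):
        t = 2 * math.pi * i / samples
        ambient = math.sin(t)
        canceling = math.sin(t + math.pi + phase_error_rad)
        peak = max(peak, abs(ambient + canceling))
    return peak


print(residual_peak(0.0))  # essentially zero: only floating-point noise remains
print(residual_peak(0.1))  # about 0.1: a small phase error lets some light through
```

For a phase error ε the residual amplitude is 2·sin(ε/2), so cancellation degrades gracefully rather than failing outright when the phase is slightly off.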

So why call it a chip? Well, a typical electronic chip changes the flow of electrons according to certain conditions. Magic Leap's photonic light field chip changes the path of photons according to certain parameters. So I suppose it is a kind of chip.

What are we missing? We have a photonic light field chip and high-resolution projection, but how is an image actually built? By compositing. The image is drawn in layers, with different parts projected as sub-images at different focal distances. In other words, each frame is built from multiple scans, and each focal plane is drawn separately.
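One way to picture the layering step: give every pixel a depth, then group pixels into sub-images, one per focal plane, so that each group can be drawn on its own scan pass. The sketch below is hypothetical (the plane distances, depths, and nearest-plane rule are assumptions, not Magic Leap's method). It compares distances in diopters (1/m), a common choice in multifocal display work because focus cues change roughly evenly in diopters rather than in meters.

```python
def assign_focal_planes(depths_m: list[float],
                        plane_distances_m: list[float]) -> dict[int, list[int]]:
    """Group pixel indices by the focal plane nearest each pixel's depth.

    Distances are compared in diopters (1/m), so a depth of float("inf")
    (optical infinity) maps to 0 diopters.
    """
    plane_diopters = [1.0 / d for d in plane_distances_m]
    layers: dict[int, list[int]] = {i: [] for i in range(len(plane_diopters))}
    for pixel, depth in enumerate(depths_m):
        target = 1.0 / depth
        nearest = min(range(len(plane_diopters)),
                      key=lambda i: abs(plane_diopters[i] - target))
        layers[nearest].append(pixel)
    return layers


# Hypothetical focal planes at 0.5 m, 1 m and 3 m, and four pixel depths.
print(assign_focal_planes([0.4, 0.9, 2.0, float("inf")], [0.5, 1.0, 3.0]))
# {0: [0], 1: [1], 2: [2, 3]}
```

Each list in the result is one sub-image; drawing them one after another at their respective focal settings builds up the full frame.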

Camera

Magic Leap appears to want its cameras to serve three functions. The first is the most obvious: a camera that takes everyday photos. This is the simplest camera technology they will use, probably one of the latest sensors from the smartphone market. Whether this camera sits on the glasses or in the portable module is still unknown, but there will surely be one that can take pictures.

The other two functions are more interesting. Magic Leap has repeatedly said that its device can understand the world around it; in one interview, it was mentioned that the device can recognize objects such as knives and forks. That requires a set of cameras. HoloLens, which does this well, is a useful reference: it has four environment-understanding cameras and a depth camera. Magic Leap's patent offers further confirmation.

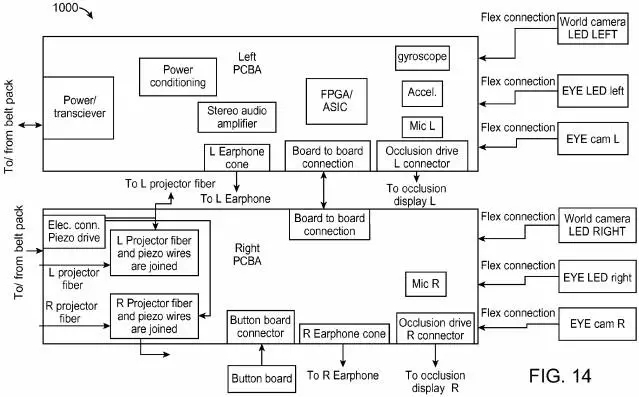

The schematic shows the two components on the left and right lens temples. Above is the left temple and below is the right temple.

In the schematic above we can see two outward-facing cameras, called "world cameras". The patent text suggests there may be more than two, describing "one or more cameras facing the outside or providing a world perspective (on each side)". For now, I don't know how many cameras there are or how small Magic Leap will make these components. What we do know is that they will sit on the glasses, which is essential for SLAM.

The last camera function can also be found in the schematic above: at least two cameras point at the eyes. They track gaze direction and eye movement so the device can tell what you are looking at and where you are focusing. Infrared LEDs illuminate the eyes for these cameras. Eye tracking is important for user interaction.
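The article does not say how focus is derived from the eye images. One common approach, assumed here rather than taken from Magic Leap's patent, is vergence: the two eyes rotate inward by different amounts depending on how far away the fixated point is, so the depth where the two gaze rays cross estimates the viewing distance. The sketch below assumes the eye cameras already report each eye's inward rotation angle; the 64 mm interpupillary distance is a hypothetical typical value.

```python
import math


def fixation_depth_m(ipd_m: float, left_inward_deg: float,
                     right_inward_deg: float) -> float:
    """Depth (along the straight-ahead axis) at which the two gaze rays cross.

    Eyes sit at x = -ipd/2 and x = +ipd/2 looking along +z; each angle is
    that eye's inward rotation from straight ahead. The rays meet where
    z * (tan(left) + tan(right)) = ipd.
    """
    spread = (math.tan(math.radians(left_inward_deg))
              + math.tan(math.radians(right_inward_deg)))
    if spread <= 0:
        return math.inf  # parallel or diverging gaze: focused at "infinity"
    return ipd_m / spread


IPD = 0.064  # hypothetical 64 mm interpupillary distance
angle = math.degrees(math.atan(0.032))  # each eye turned in about 1.83 degrees
print(round(fixation_depth_m(IPD, angle, angle), 3))  # 1.0
```

At 1 m the eyes converge by less than 2° each, so a vergence-based estimate depends on precise eye tracking, which fits the article's emphasis on dedicated eye cameras.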

I think the question "What are you looking at?" will be central to how you interact with Magic Leap. It will be the main interaction tool, like a computer mouse.

Of course, I can't yet verify whether this information is accurate, but taken together, it does feel like what Magic Leap is building. Whether or not the final product succeeds in the consumer market, this is the kind of genuine innovation the technology industry has not seen in quite a while. I am really excited to see where they take it, and I look forward to the product's impact on the industry.

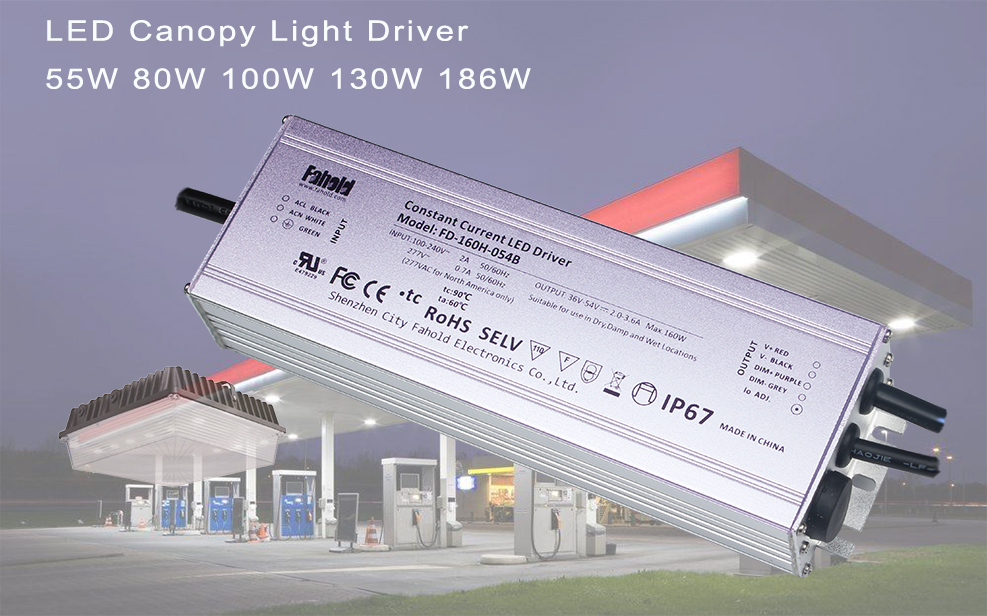
